You overheard your managers discussing whether or not the recent product launch was successful. The director of sales was very confident about his claims and calling it a success. The director of marketing was challenging the claims. Your new boss, the director of IT, is still trying to figure out what was happening since he just took over the role. You wanted to help your boss prove the claims but you have no idea about the product launch, let alone whether or not it was successful. Besides, you didn’t have a monitoring tool in place to check the database activity before, during and after the product launch. You decided to get back to your boring task of reviewing the backup jobs when an idea just appeared out of nowhere: why not use the database backups to analyze database activity?

What Your Database Backup Contains

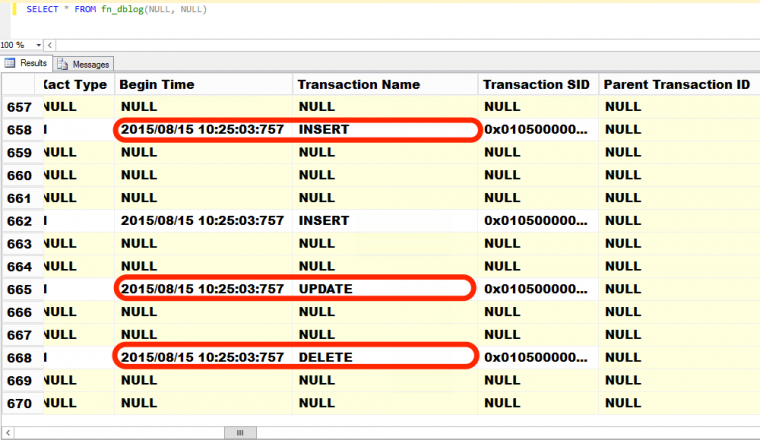

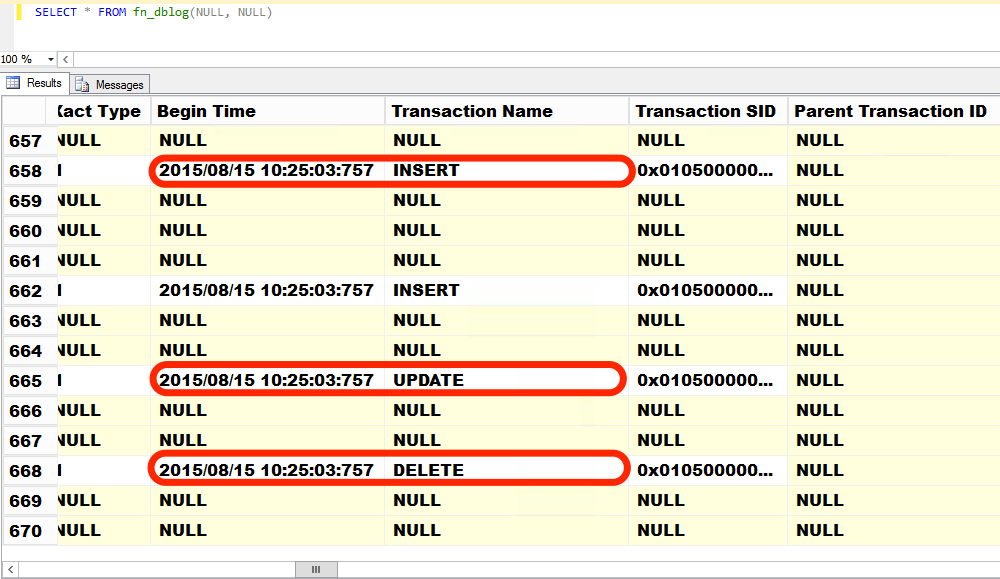

A full database backup contains a copy of your database when the backup was taken. This means you can restore your database to that point when the backup was taken. Unfortunately, it’s just a snapshot – it doesn’t tell you what happened and when it happened. But that’s what the transaction log is for. The transaction log keeps a record of all the transactions and modifications made in a database – what happened and when it happened. If you take regular transaction log backups, not only are you managing the log by constantly truncating it but also allowing you to recover the database to a specific point in time. Here’s a screenshot of what’s currently inside the transaction log using the undocumented function fn_dblog(), highlighting INSERT, UPDATE and DELETE commands executed against the database.

Now, understand that these records get truncated either in a checkpoint process (when your database is in SIMPLE recovery model) or in a transaction log backup (when your database is in FULL or BULK-LOGGED recovery model.) This means you can only read what is currently in the transaction log. So, if you want to analyze database activity during a specific period of time, you can’t just rely on the transaction log. And, this is where we can use our existing transaction log backups. Note that I am assuming that you configured both FULL and LOG backups immediately after the database was created. Otherwise, you’re out of luck.

Using the Transaction Log Backups To Analyze Database Activity

If you have transaction log backups, now is a good time to check if they are all kept intact and accessible. You’re actually going to perform a validation of your transaction log backup in this process so we’re hitting two birds with one stone here. Here’s how you can use your transaction log backups to analyze database activity.

- Identify the date range that you want to analyze. Choose a date range that you want to analyze. You can start from the time when the database was created. But that would be useless since you won’t have any transactions from that point. You can choose a starting date of about 6 hours prior to the main activity. In this case, 6 hours prior to product launch to maybe 7 days after the launch.

- List all of the transaction log backups that you want to analyze. You need to query the backup history tables in the msdb database to identify the transaction log backups that you need. You can use this sample query to list all of the transaction log backups that you need.

SELECT physical_device_name

FROM msdb.dbo.backupset a INNER JOIN msdb.dbo.backupmediafamily b

ON a.media_set_id = b.media_set_id

WHERE database_name='SampleDB'

--we only need the transaction log backups

AND TYPE='L'

--this is the date range that we specify to analyze the database transactions

AND backup_start_date BETWEEN '2011-05-18 00:00:00.000' AND '2011-05-25 00:00:00.000'

ORDER BY a.backup_start_date DESC

- Copy all of the transaction log backups identified from Step #2 to a test server. Once you have the list of transaction log backups you need from the backup history, copy them over to a test server. If the backups are already on tape, request a copy of those backups from your backup administrator. Now, you didn’t think I was going to run this on the production server, did you? I’m going to perform analysis on data that is no longer on the production database so I might as well just use a separate server for this purpose.

- Create a worktable on the test server to store the data. Because we will be reading transaction log records from the transaction log backups, we need a place to store the results so we can analyze it later on. Here’s a table schema that you can use to store the results.

USE tempdb

GO

CREATE TABLE fndumpdblog

(

DatabaseName NVARCHAR(50),

TransactionName NVARCHAR(50),

NumTransactions INT,

backupstartdate VARCHAR(20)

) - Generate dynamic SQL to analyze the transaction log backups. For this purpose, we will use the undocumented function fn_dump_dblog() to read contents of the transaction log backups. What we’re doing here is we’re running this query on the production server (not on the test server) to dynamically generate the SQL that contains the fn_dump_dblog() function using the information from the backup history table on msdb. You can use the query below to do so.

SELECT 'INSERT INTO fndumpdblog SELECT ''' + database_name + ''',[Transaction Name],

COUNT(*),' + '''' + CAST(backup_start_date AS CHAR(20)) + '''

FROM fn_dump_dblog(DEFAULT, DEFAULT,DEFAULT, DEFAULT,

--we need to copy the transaction log backups to the test server using this location or change this value later on to something else

''' + physical_device_name + ''',

DEFAULT,DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT)

where [Transaction Name] in (''INSERT'', ''UPDATE'',''DELETE'') group by [Transaction Name]'

FROM msdb.dbo.backupset a INNER JOIN msdb.dbo.backupmediafamily b

ON a.media_set_id = b.media_set_id

WHERE database_name='SampleDB'

--we only need the transaction log backups

AND TYPE='L'

--this is the date range that we specify to analyze the database transactions

AND backup_start_date BETWEEN '2011-05-18 00:00:00.000' AND '2011-05-25 00:00:00.000'

ORDER BY a.backup_start_date DESC

- Run the generated SQL on the test server. We need to copy the generated SQL from the production server to the test server because that’s where we will perform the analysis. The generated SQL looks something like this.Make sure that transaction log backups you copied exist on the C:\log_backups folder for the query to work. Otherwise, this will fail. This query will read the transaction log backups for records with [Transaction Name] value of INSERT, UPDATE or DELETE and aggregate them according to the date when the backup was taken. The results will then be inserted to the worktable we’ve created in Step #4.

INSERT INTO fndumpdblog SELECT 'SampleDB',[Transaction Name],

COUNT(*),'May 25 2011 5:40PM '

FROM fn_dump_dblog(DEFAULT, DEFAULT,DEFAULT, DEFAULT,

'C:\log_backups\SampleDB_log_201105251740.trn',

DEFAULT,DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT)

WHERE [Transaction Name] IN ('INSERT', 'UPDATE','DELETE') GROUP BY [Transaction Name]

Running the generated SQL on the test server will take some time. That’s because the fn_dump_dblog() function uses a lot of memory and I/O resources to read the transaction log backup files. Imagine what the impact would be like if you ran this query on your production server. That’s why it is recommended to run this on a separate test server.

In part 2 of this series, we will look at analyzing the results that we got from the fn_dump_dblog() function to determine database activity for a specified period of time – even without a monitoring tool in place.

Please note: I reserve the right to delete comments that are offensive or off-topic.